Reading notes on “Introduction to InfiniBand for End Users”.

1. Basic Concepts

1.1. InfiniBand vs traditional networking

Traditional computer networking

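- “network centric” view: the focus is on the hardware and its management

- Hardware resources shared by applications are managed centrally by the operating system, and applications cannot access them directly

- During network communication, each packet is copied 2 to 3 times: I/O -> anonymous buffer pool -> application's virtual memory space

- Byte stream-oriented: data is delivered as a stream of bytes, so even after a complete message has reached the peer, the receiver may need several system calls to read the whole message

- A data center network may consist of three separate networks:

  - one for networking

  - one for storage

  - one for IPC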
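The byte-stream point can be made concrete with a short Python example that is not taken from the source document. It sends one length-prefixed message over a local stream socket pair and then reads it back. A stream socket may return fewer bytes than requested, so the hypothetical helper `recv_exact` has to loop, and each iteration is one `recv` system call. Two details are assumptions. The 4-byte length prefix is an illustrative framing convention and is not part of any InfiniBand or TCP specification. The 1024-byte read size is an arbitrary cap chosen to make the repeated calls visible.

```python
import socket
import struct
import threading


def recv_exact(sock: socket.socket, n: int) -> tuple[bytes, int]:
    """Read exactly n bytes from a stream socket; return the data and the number of recv calls."""
    chunks = []
    calls = 0
    remaining = n
    while remaining > 0:
        # Each recv() is a system call and may return fewer bytes than requested.
        chunk = sock.recv(min(remaining, 1024))
        calls += 1
        if not chunk:
            raise ConnectionError("peer closed before the message was complete")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks), calls


def read_message(sock: socket.socket) -> tuple[bytes, int]:
    """Read one message framed as a 4-byte big-endian length followed by the payload."""
    header, header_calls = recv_exact(sock, 4)
    (length,) = struct.unpack("!I", header)
    body, body_calls = recv_exact(sock, length)
    return body, header_calls + body_calls


if __name__ == "__main__":
    a, b = socket.socketpair()  # a connected pair of stream sockets
    payload = b"x" * 10_000
    # Send from a thread so a full socket buffer cannot block the reader.
    sender = threading.Thread(target=a.sendall, args=(struct.pack("!I", len(payload)) + payload,))
    sender.start()
    message, calls = read_message(b)
    sender.join()
    print(len(message), calls)  # the single message took many recv() calls to reassemble
    a.close()
    b.close()
```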

InfiniBand

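- “application centric” view: InfiniBand provides applications with a messaging service and focuses on making communication between applications simple and efficient

- Direct access and stack bypass: applications do not have to depend on the operating system

  - avoiding operating system calls

  - avoiding unnecessary buffer copies

- Message-oriented: data is delivered as messages, and a single message can be up to $2^{31}$ bytes

  - The sender's InfiniBand hardware automatically splits an outbound message into multiple packets and delivers them into the receiving application's virtual buffer

  - The receiving application is notified only after the complete message has arrived

  - Neither the sending nor the receiving application takes part while the message is in transit

- InfiniBand handles storage, networking and IPC I/O in a unified way, so a single underlying network is sufficient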
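The segmentation and completion behaviour described above can be shown with a toy Python model. It does not reproduce what HCA hardware actually does. The 4096-byte path MTU is an assumed value, since InfiniBand MTUs range from 256 to 4096 bytes. The class `ReceiveBuffer` is hypothetical. The model shows two things: each packet is placed directly at its offset in the application's buffer, and the application is notified exactly once, when the last byte is in place.

```python
MTU = 4096  # assumed path MTU in bytes


def segment(message: bytes, mtu: int = MTU) -> list[tuple[int, bytes]]:
    """Split an outbound message into (offset, payload) packets of at most `mtu` bytes."""
    return [(off, message[off:off + mtu]) for off in range(0, len(message), mtu)]


class ReceiveBuffer:
    """Hypothetical model of an application buffer that the adapter fills directly."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        self.received = 0
        self.completions = 0  # number of times the application has been notified

    def place(self, offset: int, payload: bytes) -> None:
        # Copy the packet payload straight to its final position in the application's buffer.
        self.data[offset:offset + len(payload)] = payload
        self.received += len(payload)
        if self.received == len(self.data):
            # Only a complete message produces a notification.
            self.completions += 1


if __name__ == "__main__":
    msg = bytes(range(256)) * 40  # 10240 bytes
    packets = segment(msg)
    buf = ReceiveBuffer(len(msg))
    for off, payload in packets:
        buf.place(off, payload)
    print(len(packets), buf.completions, bytes(buf.data) == msg)  # 3 1 True
```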

1.2. InfiniBand Architecture

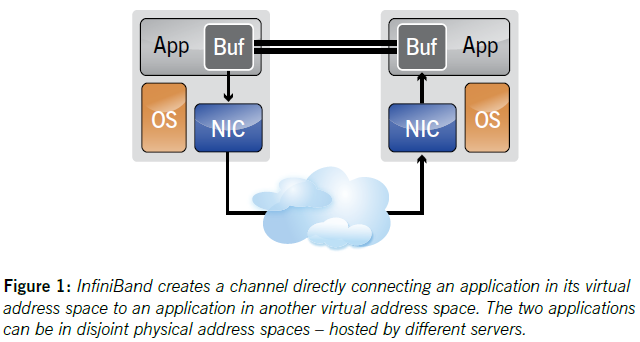

InfiniBand provides its messaging service by creating channel connections between applications (hence the name Channel I/O), as shown in the figure:

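- Queue Pairs (QPs): the endpoints of a channel. Each QP consists of a Send Queue (SQ) and a Receive Queue (RQ).

  - QPs are mapped into the application's virtual memory space, which lets the application access them directly

- InfiniBand provides two transfer semantics for passing messages:

  - channel semantic: `SEND`/`RECEIVE`

    - The receiver sets up its data structures in advance in its own Receive Queue

    - The sender does not need to know the layout of the receiver's RQ; it simply calls `SEND` to send the message

  - memory semantic: `RDMA READ`/`RDMA WRITE`

    - The receiver registers a buffer in its own virtual memory space and passes control of that buffer to the sender

    - The sender reads or writes that buffer by calling `RDMA READ` or `RDMA WRITE`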
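A toy Python model of a QP endpoint makes the difference between the two semantics visible. All names here are hypothetical and invented for illustration. In OFED the real interface is the C library libibverbs, for example `ibv_post_recv`, `ibv_post_send` and `ibv_reg_mr`, and its API looks different. The send queue is collapsed into immediate method calls. A `SEND` consumes a buffer that the receiver posted to its RQ, so it fails when nothing has been posted. An `RDMA WRITE` lands in a registered region named by a key and does not touch the receiver's RQ at all.

```python
from collections import deque


class ToyQP:
    """Hypothetical QP endpoint: a receive queue plus buffers registered for remote access."""

    def __init__(self) -> None:
        self.recv_queue = deque()  # buffers posted by the application (RECEIVE work requests)
        self.regions: dict[int, bytearray] = {}  # registered buffers, looked up by a remote key

    # channel semantic: SEND / RECEIVE
    def post_recv(self, buf: bytearray) -> None:
        self.recv_queue.append(buf)

    def send(self, peer: "ToyQP", data: bytes) -> bytearray:
        # The sender knows nothing about the peer's buffers; the peer's oldest posted RECEIVE decides.
        if not peer.recv_queue:
            raise RuntimeError("no RECEIVE posted at the peer")
        target = peer.recv_queue.popleft()
        if len(data) > len(target):
            raise ValueError("message larger than the posted buffer")
        target[:len(data)] = data
        return target

    # memory semantic: RDMA READ / RDMA WRITE
    def register(self, key: int, buf: bytearray) -> None:
        self.regions[key] = buf

    def rdma_write(self, peer: "ToyQP", key: int, offset: int, data: bytes) -> None:
        # The sender names the target (key + offset); the peer's receive queue is not involved.
        region = peer.regions[key]
        region[offset:offset + len(data)] = data

    def rdma_read(self, peer: "ToyQP", key: int, offset: int, length: int) -> bytes:
        return bytes(peer.regions[key][offset:offset + length])


if __name__ == "__main__":
    a, b = ToyQP(), ToyQP()
    b.post_recv(bytearray(16))
    print(bytes(a.send(b, b"hello"))[:5])  # b'hello'
    region = bytearray(8)
    b.register(key=0x1234, buf=region)
    a.rdma_write(b, key=0x1234, offset=2, data=b"ab")
    print(bytes(region), len(b.recv_queue))  # b'\x00\x00ab\x00\x00\x00\x00' 0
```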

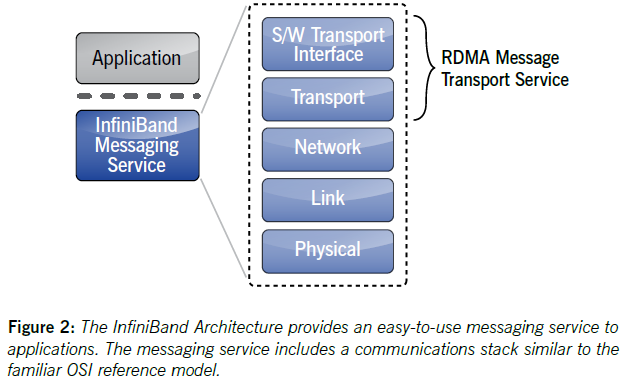

The InfiniBand Architecture is shown in the figure:

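- Software transport interface: the APIs and libraries used to create and manage channels and to pass messages through QPs

- InfiniBand transport layer: provides reliability and delivery guarantees, similar to the TCP transport

- Network layer: similar to the IP layer

- Link & Physical layers: wires and switches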
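Each layer in this stack adds its own header to every packet. As a rough illustration, the Python sketch below adds up the header sizes commonly cited for the InfiniBand packet format:

- the Local Route Header (LRH, 8 bytes), used by the link layer
- the optional Global Route Header (GRH, 40 bytes), used by the network layer when a packet crosses a router
- the Base Transport Header (BTH, 12 bytes)
- two trailing CRCs (ICRC, 4 bytes; VCRC, 2 bytes)

These names and sizes come from the InfiniBand specification, not from the notes. The sketch ignores extended transport headers, such as those carried by RDMA operations, so treat the totals as approximate.

```python
# Header sizes in bytes (assumed from the InfiniBand packet format; extended
# transport headers such as those used by RDMA operations are ignored here).
LAYERS = [
    ("LRH", "link", 8),
    ("GRH", "network", 40),  # only present when the packet leaves the local subnet
    ("BTH", "transport", 12),
]
TRAILERS = [("ICRC", 4), ("VCRC", 2)]


def packet_size(payload_len: int, crosses_subnet: bool) -> int:
    """Total bytes on the wire for one packet carrying `payload_len` bytes of payload."""
    headers = sum(size for name, _, size in LAYERS if crosses_subnet or name != "GRH")
    trailers = sum(size for _, size in TRAILERS)
    return headers + payload_len + trailers


if __name__ == "__main__":
    for crosses in (False, True):
        size = packet_size(4096, crosses)
        print("crosses subnet" if crosses else "local subnet", size, f"{4096 / size:.1%} payload")
```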

1.3. Hardware Components

Making use of the features of the InfiniBand Architecture requires the following dedicated hardware:

- Host Channel Adapter (HCA)

  - Connects an InfiniBand end node to the InfiniBand network

  - Provides address translation mechanisms so that applications can access physical memory directly

- Target Channel Adapter (TCA)

  - A specialized channel adapter for embedded environments

- Switches

  - Designed to be “cut through” for performance and cost reasons, and they implement InfiniBand's link layer flow control protocol to avoid dropped packets

- Routers

  - Divide a large network into multiple subnets, which gives an InfiniBand network its scalability

  - Connect two InfiniBand subnets that are physically far apart

- Cables and Connectors
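Some back-of-the-envelope arithmetic shows why “cut through” matters. A store-and-forward switch must receive a whole packet before it can forward it. A cut-through switch can start forwarding as soon as it has read the header that carries the destination. The Python sketch below compares only the per-hop serialization delay of the two designs. The 32 Gb/s data rate, the 4096-byte packet and the 8-byte header are illustrative assumptions. Real switch latency also includes fixed lookup and arbitration time, which the sketch ignores.

```python
def serialization_ns(num_bytes: int, gbps: float) -> float:
    """Time in nanoseconds to clock num_bytes onto a link running at gbps gigabits per second."""
    return num_bytes * 8 / gbps  # bits / (Gb/s) = ns


def hop_delay_ns(packet_bytes: int, header_bytes: int, gbps: float, cut_through: bool) -> float:
    """Serialization delay a switch adds before it can start sending the packet onward."""
    waited_for = header_bytes if cut_through else packet_bytes
    return serialization_ns(waited_for, gbps)


if __name__ == "__main__":
    RATE = 32.0    # assumed link data rate, Gb/s
    PACKET = 4096  # assumed packet size, bytes
    HEADER = 8     # assumed bytes read before the forwarding decision
    for ct in (False, True):
        label = "cut-through" if ct else "store-and-forward"
        print(label, hop_delay_ns(PACKET, HEADER, RATE, ct), "ns")
```

With these assumed numbers, the store-and-forward wait grows with packet size, while the cut-through wait depends only on the header.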

2. InfiniBand for HPC

The features of the InfiniBand architecture bring the following benefits to HPC:

- Ultra-low latency for:

  - Scalability

  - Cluster performance

- Channel I/O delivers:

  - Scalable storage bandwidth performance

  - Support for shared disk cluster file systems and parallel file systems

3. InfiniBand for the Enterprise

Devoting Server Resources to Application Processing

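- Virtualization only improves the utilization of server resources

- Because InfiniBand avoids unnecessary system calls and memory copies, server resources can be devoted fully to applications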

A Flexible Server Architecture

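- Existing servers pre-allocate I/O bandwidth in fixed proportions between the storage fabric (HBA) and the Ethernet network (NIC), and this split may not match what applications actually need

- InfiniBand handles storage, networking and IPC in a unified way, so I/O bandwidth does not have to be pre-allocated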

4. Designing with InfiniBand

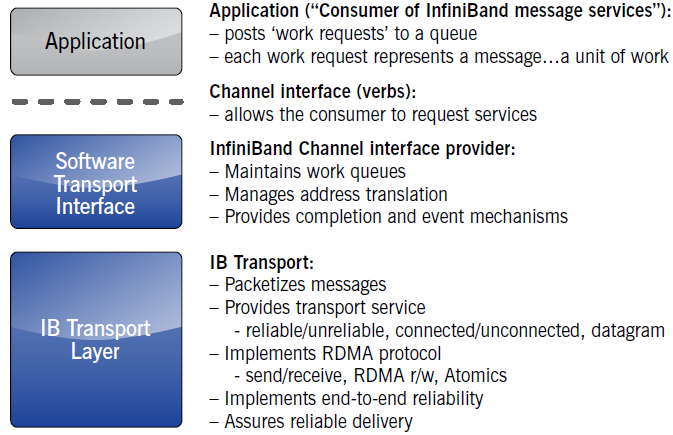

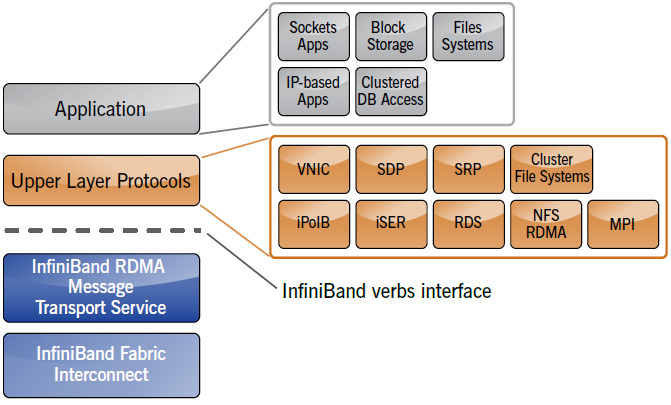

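As shown in the figure, applications use verbs to place work requests (WRs) on work queues, i.e., the QPs.

Verbs are only a specification of how applications use and manage InfiniBand services, not a concrete API.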
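A Python abstract base class can illustrate the difference between a specification and a concrete API. The abstract class fixes which operations exist and what they promise, and each implementation decides how they are carried out. All names below are hypothetical and only loosely echo verbs such as Post Send Request. The completion-polling step is a simplified stand-in for how results are reported back to the application. OFED's concrete implementation is the C library libibverbs (for example `ibv_post_send` and `ibv_poll_cq`), and its calls look quite different from this toy.

```python
from abc import ABC, abstractmethod
from collections import deque


class Verbs(ABC):
    """Hypothetical 'specification': what an implementation must do, not how it is called."""

    @abstractmethod
    def post_send(self, wr_id: int, payload: bytes) -> None:
        """Queue a work request on the send queue and return without waiting."""

    @abstractmethod
    def poll_completions(self) -> list[int]:
        """Return the ids of work requests that have finished since the last poll."""


class ToyAdapter(Verbs):
    """One possible implementation: a send queue drained by a simulated adapter."""

    def __init__(self) -> None:
        self.send_queue = deque()
        self.completed: list[int] = []
        self.wire: list[bytes] = []  # what the simulated adapter has transmitted

    def post_send(self, wr_id: int, payload: bytes) -> None:
        self.send_queue.append((wr_id, payload))  # no data moves here

    def run_hardware(self) -> None:
        # Stand-in for the adapter working through the queue independently of the application.
        while self.send_queue:
            wr_id, payload = self.send_queue.popleft()
            self.wire.append(payload)
            self.completed.append(wr_id)

    def poll_completions(self) -> list[int]:
        done, self.completed = self.completed, []
        return done


if __name__ == "__main__":
    hca = ToyAdapter()
    hca.post_send(1, b"a")
    hca.post_send(2, b"b")
    print(hca.poll_completions())  # [] (posted, but not processed yet)
    hca.run_hardware()
    print(hca.poll_completions())  # [1, 2]
```

Because `Verbs` is abstract, it cannot be instantiated. Only an implementation such as `ToyAdapter` can, which mirrors the spec-versus-API split described above.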

The software needed to implement InfiniBand falls roughly into the following three categories, and all of the source code is available from OFED:

- Upper Layer Protocols(ULPs) and associated libraries

- mid-layer functions: configure and manage the underlying InfiniBand fabric and provide the services that ULPs need

- hardware specific device drivers

A ULP has two interfaces:

- upward-facing interface: called by applications

- downward-facing interface: uses the underlying InfiniBand messaging service through QPs
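The two interfaces can be sketched with a toy ULP in Python that sits in the same position SDP occupies. This sketch does not describe how SDP is actually implemented. `ToySocketsULP`, `RecordingQP` and the 1024-byte per-message limit are hypothetical. The upward-facing side offers the same `sendall` call a socket does, so an application written against sockets runs unchanged. The downward-facing side turns that call into messages posted on a QP.

```python
import socket


def application(conn, data: bytes) -> None:
    """Unmodified 'sockets application': it only ever calls conn.sendall()."""
    conn.sendall(data)


class RecordingQP:
    """Hypothetical stand-in for a QP: records each message posted on its send queue."""

    def __init__(self) -> None:
        self.posted: list[bytes] = []

    def post_send(self, payload: bytes) -> None:
        self.posted.append(payload)


class ToySocketsULP:
    """Hypothetical ULP with a sockets-style upward interface and a QP-based downward interface."""

    def __init__(self, qp: RecordingQP, max_message: int = 1024) -> None:
        self.qp = qp
        self.max_message = max_message  # assumed per-message limit chosen by the ULP

    def sendall(self, data: bytes) -> None:
        # Upward-facing: the same call the application would make on a real socket.
        for off in range(0, len(data), self.max_message):
            # Downward-facing: hand each chunk to the messaging service through the QP.
            self.qp.post_send(data[off:off + self.max_message])


if __name__ == "__main__":
    a, b = socket.socketpair()
    application(a, b"hello")  # over a real stream socket
    print(b.recv(5))
    qp = RecordingQP()
    application(ToySocketsULP(qp), b"x" * 2500)  # the same code over the toy ULP
    print([len(m) for m in qp.posted])  # [1024, 1024, 452]
    a.close()
    b.close()
```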

OFED provides a variety of ULPs to meet the different needs of applications:

- SDP: Sockets Direct Protocol. This ULP allows a sockets application to take advantage of an InfiniBand network with no change to the application.

- SRP: SCSI RDMA Protocol. This allows a SCSI file system to directly connect to a remote block storage chassis using RDMA semantics. Again, there is no impact on the file system itself.

- iSER: iSCSI Extensions for RDMA. iSCSI is a protocol allowing a block storage file system to access a block storage device over a generic network. iSER allows the user to operate the iSCSI protocol over an RDMA-capable network.

- IPoIB: IP over InfiniBand. This important part of the suite of ULPs allows an application hosted in, for example, an InfiniBand-based network to communicate with other sources outside the InfiniBand network using standard IP semantics. Although often used to transport TCP/IP over an InfiniBand network, the IPoIB ULP can be used to transport any of the suite of IP protocols including UDP, SCTP and others.

- NFS-RDMA: Network File System over RDMA. NFS is a well-known and widely-deployed file system providing file level I/O (as opposed to block level I/O) over a conventional TCP/IP network. This enables easy file sharing. NFS-RDMA extends the protocol and enables it to take full advantage of the high bandwidth and parallelism provided naturally by InfiniBand.

- Lustre support: Lustre is a parallel file system enabling, for example, a set of clients hosted on a number of servers to access the data store in parallel. It does this by taking advantage of InfiniBand’s Channel I/O architecture, allowing each client to establish an independent, protected channel between itself and the Lustre Metadata Servers (MDS) and associated Object Storage Servers and Targets (OSS, OST).

- RDS: Reliable Datagram Sockets offers a Berkeley sockets API allowing messages to be sent to multiple destinations from a single socket. This ULP, originally developed by Oracle, is ideally designed to allow database systems to take full advantage of the parallelism and low latency characteristics of InfiniBand.

- MPI: The MPI ULP for HPC clusters provides full support for MPI function calls.

5. InfiniBand Architecture and Features

5.1. Address Translation

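- The HCA occupies its own region of physical addresses. Through the memory registration process, an application requests the address translations it needs, and the HCA then uses its address translation table to perform the required virtual-to-physical address translation

- I/O channels are created by the HCA, and a QP can be thought of as the application's interface to the HCA. A single HCA can support up to $2^{24}$ QPs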

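Because the HCA provides address translation, two core InfiniBand capabilities become possible:

- Access to the InfiniBand messaging service from user space

- Using the InfiniBand messaging service to access another application's virtual address space “directly”

  - `RDMA Read`/`RDMA Write` operations are performed using the key and virtual address supplied by the remote side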
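A toy model of registration and translation follows. Registering a virtual range records, page by page, which physical page backs that range, and returns a key. A later access is translated through this table only if it presents that key and a virtual address inside the registered range. The page size, addresses, key values and class name are all hypothetical. Real registration, for example `ibv_reg_mr` in libibverbs, also pins the pages and sets access permissions, and this sketch omits both.

```python
import itertools

PAGE = 4096  # assumed page size in bytes


class TranslationTable:
    """Hypothetical HCA translation table: key -> (virtual base, length, physical pages)."""

    def __init__(self) -> None:
        self.regions: dict[int, tuple[int, int, list[int]]] = {}
        self._keys = itertools.count(0x100)  # arbitrary starting key value

    def register(self, vaddr: int, length: int, page_map: dict[int, int]) -> int:
        """Record the physical page behind each virtual page of [vaddr, vaddr + length); return a key."""
        first = vaddr // PAGE
        last = (vaddr + length - 1) // PAGE
        # page_map plays the role of the OS page tables: virtual page number -> physical page number.
        phys_pages = [page_map[vpn] for vpn in range(first, last + 1)]
        key = next(self._keys)
        self.regions[key] = (vaddr, length, phys_pages)
        return key

    def translate(self, key: int, vaddr: int) -> int:
        """Translate a virtual address presented with a key into a physical address."""
        if key not in self.regions:
            raise PermissionError("unknown key")
        base, length, phys_pages = self.regions[key]
        if not base <= vaddr < base + length:
            raise PermissionError("address outside the registered region")
        page_index = vaddr // PAGE - base // PAGE
        return phys_pages[page_index] * PAGE + vaddr % PAGE


if __name__ == "__main__":
    table = TranslationTable()
    # Virtual pages 16 and 17 happen to be backed by non-contiguous physical pages 900 and 42.
    key = table.register(vaddr=16 * PAGE + 100, length=5000, page_map={16: 900, 17: 42})
    print(hex(table.translate(key, 16 * PAGE + 100)))
    print(hex(table.translate(key, 17 * PAGE)))
```

The key doubles as a capability: an access that presents an unknown key, or an address outside the registered range, is rejected rather than translated.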

5.2. The InfiniBand Transport

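The InfiniBand transport provides the following message transport services:

- Channel semantic operations: a reliable or an unreliable `SEND/RECEIVE` service, similar to TCP/UDP

  - Reliability is achieved through transport recovery mechanisms and by notifying the application

  - `SEND/RECEIVE` operations are commonly used to transfer short control messages

  - Flow:

    - The receiving application uses the `Post Receive Request` verb to place WRs on the RQ. Each `RECEIVE` WR represents a buffer in the application's virtual memory space

    - The sending application uses the `Post Send Request` verb to place WRs on the SQ. Each `SEND` WR represents one message, and the target of the `SEND` operation is the buffer associated with a `RECEIVE` WR at the receiver

- Memory semantic operations: `RDMA Read` and `RDMA Write` services

  - Commonly used to transfer bulk data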

- Atomic Operations

- Multicast services
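The reliable/unreliable distinction for channel semantic operations can be sketched in Python. This is a deliberately simplified stop-and-wait model, not the actual InfiniBand reliable transport, which pipelines many packets and tracks them with packet sequence numbers (PSNs). In the model, the sender retransmits each PSN until it gets through. If the retry limit is exhausted, the sender stops and reports an error to the application, which mirrors "transport recovery plus notifying the application". The unreliable variant never retransmits. The `drops` schedule and the retry limit of 7 are assumptions for illustration.

```python
def reliable_send(num_packets: int, drops: dict[int, int], retry_limit: int = 7):
    """Stop-and-wait model: drops[psn] = how many times packet psn is lost before getting through.

    Returns (status, psn_reached, transmissions)."""
    transmissions = 0
    for psn in range(num_packets):
        failures = 0
        while True:
            transmissions += 1
            if failures < drops.get(psn, 0):
                failures += 1
                if failures > retry_limit:
                    return "error", psn, transmissions  # give up and notify the application
                continue  # no acknowledgement before the timeout: retransmit the same PSN
            break  # acknowledgement received for this PSN
    return "success", num_packets, transmissions


def unreliable_send(num_packets: int, drops: dict[int, int]) -> list[int]:
    """No retransmission: a packet lost on its first attempt is simply gone."""
    return [psn for psn in range(num_packets) if drops.get(psn, 0) == 0]


if __name__ == "__main__":
    print(reliable_send(3, {1: 2}))    # ('success', 3, 5)
    print(unreliable_send(3, {1: 2}))  # [0, 2]
    print(reliable_send(2, {0: 10}))   # ('error', 0, 8)
```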

5.3. InfiniBand Link Layer Considerations

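- InfiniBand link layer lossless flow control: the hardware tracks how much buffer space is free at the receiver and transmits only when the data can be accepted, so nothing is dropped

- TCP lossy flow control: the sender does not check whether the downstream side can accept packets before transmitting, so packets may be lost. TCP in fact uses packet loss as a signal of downstream conditions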
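A small Python simulation contrasts the two policies. In the credit-based mode, the receiver advertises its free buffer slots as credits, and the sender transmits only while it holds a credit. Each slot the receiver drains returns one credit. In the lossy mode, the sender transmits regardless, and packets that arrive at a full buffer are discarded. This is not a model of TCP congestion control. The burst rate of two packets per tick and the buffer sizes are assumptions, and `drain_per_tick` must be at least 1 for the loop to finish.

```python
from collections import deque


def simulate(num_packets: int, buffer_slots: int, drain_per_tick: int, credit_based: bool):
    """Send num_packets as fast as allowed into a receiver buffer; return (delivered, dropped, ticks)."""
    buffer = deque()
    credits = buffer_slots  # credit-based: the receiver advertises its free slots up front
    next_pkt = delivered = dropped = ticks = 0
    while delivered + dropped < num_packets:
        ticks += 1
        # Sender: up to 2 packets per tick (an assumed burst rate faster than the drain rate).
        for _ in range(2):
            if next_pkt == num_packets:
                break
            if credit_based:
                if credits == 0:
                    break  # wait for credits instead of transmitting
                credits -= 1
                buffer.append(next_pkt)
            elif len(buffer) < buffer_slots:
                buffer.append(next_pkt)
            else:
                dropped += 1  # lossy: the packet reaches a full buffer and is discarded
            next_pkt += 1
        # Receiver: drains packets and, in credit mode, returns one credit per freed slot.
        for _ in range(min(drain_per_tick, len(buffer))):
            buffer.popleft()
            delivered += 1
            if credit_based:
                credits += 1
    return delivered, dropped, ticks


if __name__ == "__main__":
    print("credit-based:", simulate(10, buffer_slots=2, drain_per_tick=1, credit_based=True))
    print("lossy:       ", simulate(10, buffer_slots=2, drain_per_tick=1, credit_based=False))
```

With these parameters the credit-based run delivers all 10 packets and simply waits when it runs out of credits, while the lossy run finishes sooner but drops packets that arrive at a full buffer.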

5.4. Management and Services

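In contrast to the autonomous Ethernet fabric, InfiniBand is centrally managed. Its management covers the following areas:

- Subnet Management (SM) and Subnet Administration (SA): discover, initialize and maintain the InfiniBand fabric

- Communication management: associates a pair of QPs to create a channel connection between two applications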

- Performance management

- Device management

- Baseboard management

- SNMP tunneling

- Vendor-specific class

- Application specific classes
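Centralized management means a single entity, the subnet manager, walks the whole fabric. Below is a minimal sketch of the discovery step, assuming the topology can be probed hop by hop. It does a breadth-first walk from the SM's own node, visits every reachable device once, and assigns each one a local identifier (LID), the 16-bit address InfiniBand uses inside a subnet. The topology, the node names and the sequential numbering are made up. A real SM discovers devices with management packets, assigns LIDs per port, and also computes switch forwarding tables, all of which this sketch omits.

```python
from collections import deque


def discover(topology: dict[str, list[str]], sm_node: str) -> dict[str, int]:
    """Breadth-first discovery from the SM's node; returns a LID for every reachable device."""
    lids = {sm_node: 1}  # assumed: LIDs handed out sequentially starting at 1
    frontier = deque([sm_node])
    while frontier:
        node = frontier.popleft()
        for neighbor in topology.get(node, []):  # "probe" each link of the node
            if neighbor not in lids:
                lids[neighbor] = len(lids) + 1
                frontier.append(neighbor)
    return lids


if __name__ == "__main__":
    fabric = {  # hypothetical two-switch subnet
        "hca-sm": ["sw1"],
        "sw1": ["hca-sm", "hca-a", "sw2"],
        "sw2": ["sw1", "hca-b", "hca-c"],
    }
    print(discover(fabric, "hca-sm"))
```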

6. Achieving an Interoperable Solution

Can be skipped.

7. InfiniBand Performance Capabilities and Examples

The document dates from 2010, so the figures below may be out of date.

Throughput

- server to server: $40\ \text{Gb/s}$

- switch to switch: $120\ \text{Gb/s}$

Latency

- application: $1\ \mu\text{s}$

- switch: $100\ \text{ns}$ to $150\ \text{ns}$
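The two figures can be combined into a first-order estimate of how long one message takes: a fixed latency plus the time needed to push the bytes through the link. The Python sketch below applies this linear model to the 2010 numbers above (1 μs latency, 40 Gb/s). It treats 40 Gb/s as the usable data rate, which overstates throughput if that figure is a signalling rate. It also ignores per-packet header overhead and extra switch hops.

```python
def transfer_time_us(message_bytes: int, latency_us: float = 1.0, gbps: float = 40.0) -> float:
    """First-order estimate: fixed latency plus serialization time, in microseconds."""
    serialization_us = message_bytes * 8 / (gbps * 1e3)  # 1 Gb/s = 1e3 bits per microsecond
    return latency_us + serialization_us


def effective_gbps(message_bytes: int, latency_us: float = 1.0, gbps: float = 40.0) -> float:
    """Throughput actually achieved for a single message under the same model."""
    return message_bytes * 8 / (transfer_time_us(message_bytes, latency_us, gbps) * 1e3)


if __name__ == "__main__":
    for size in (64, 4096, 1 << 20):
        print(f"{size:>8} B: {transfer_time_us(size):8.3f} us, {effective_gbps(size):6.2f} Gb/s")
```

Under this model, latency dominates small messages and bandwidth dominates large ones. A 64-byte message takes about 1.01 μs but achieves only about 0.5 Gb/s, while a 1 MiB message takes roughly 210.7 μs.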

For current figures, see the InfiniBand Roadmap.